Ray tracing theory is simple, but I’ll explain it anyway. Ray tracing is a generic technique that can be applied to a lot of different things. I’m applying it to one thing: graphics.

(If you want a better overview than this, look elsewhere. Look at Wikipedia. Ask someone.)

Simulating light “properly” is far too complex. Think about the sheer number of photons output by a light source (the sun), and the number of bounces each photon goes through, depositing energy each time, before finally arriving at your eye or camera, and you’ll see why it’s not possible. At least, not at the moment. If you instead ignore the light being emitted from the sun and trace the photons backwards, from your eye into the world, you are faced with a much easier challenge.

**Some notation. I’ll be using the words “eye” and “camera” interchangeably. When I use the word “scene”, I’m referring to the environment that the rays interact with.**

If you know you wish to render an image of 800 by 600 pixels, that’s 800*600 = 480,000 rays you have to trace.

- For each pixel, shoot a ray into the scene.

- Test the ray for intersections with every object in the scene.

- Find the closest intersection.

- Find the colour of that pixel.

- Rinse and repeat, until each pixel has been done.
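The steps above can be sketched as a pair of nested loops. This is only a rough Python outline, not the code behind this post: `render`, `make_ray`, the `intersect` method and the `colour` attribute are all names made up for illustration. Each object is assumed to return the distance to the hit, or `None` for a miss.

```python
def render(width, height, objects, make_ray, background=(0, 0, 0)):
    """Trace one ray per pixel and return rows of colours.

    Assumptions (hypothetical, for illustration only):
      - make_ray(x, y) builds the ray for pixel (x, y)
      - each object has intersect(ray) -> distance or None
      - each object has a .colour attribute
    """
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            ray = make_ray(x, y)  # shoot a ray into the scene
            closest_t, closest_obj = None, None
            for obj in objects:  # test against every object
                t = obj.intersect(ray)
                if t is not None and (closest_t is None or t < closest_t):
                    closest_t, closest_obj = t, obj  # keep the nearest hit
            # The colour of the pixel is the colour of whatever was hit first
            row.append(closest_obj.colour if closest_obj is not None else background)
        image.append(row)
    return image
```

For an 800 by 600 image the inner body runs 480,000 times, once per pixel, and each run tests every object in the scene.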

Object Intersections.

The simplest kind of object to test a ray against is a sphere. This is nice, because real-time applications running in OpenGL or DirectX fail miserably at perfect spheres. They have to be rendered as flat-sided objects, with lots and lots of sides.

Ah yes – some maths.

A ray can be defined as two vectors: an origin and a direction vector. The direction vector should be a unit vector.

ray = origin + direction

A sphere can be defined as a position vector (which describes the position of the centre) and a radius. That’s all:

sphere = centre + radius

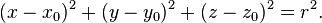

A sphere with centre (x0, y0, z0) and radius r is the locus of all points (x, y, z) such that

(x - x0)^2 + (y - y0)^2 + (z - z0)^2 = r^2

Finding the Intersection:

A set of points on a ray can be defined by:

Ray(t) = ray(origin) + ray(direction) * t

Where t is the distance from the ray origin.
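As a small sketch of the ray equation in Python (the `Vec3` and `Ray` classes and the `at` method are names I’m inventing here for illustration, not anything from a library):

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other):
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __mul__(self, scalar):
        return Vec3(self.x * scalar, self.y * scalar, self.z * scalar)

    def normalised(self):
        # Scale the vector to unit length
        length = math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)
        return Vec3(self.x / length, self.y / length, self.z / length)


@dataclass(frozen=True)
class Ray:
    origin: Vec3
    direction: Vec3  # assumed to be a unit vector

    def at(self, t):
        # Ray(t) = origin + direction * t
        return self.origin + self.direction * t
```

Because the direction is a unit vector, `ray.at(4)` is the point exactly 4 units along the ray from its origin.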

The ray equation can be substituted into the sphere equation, and solved to find t.

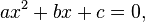

This takes the form of a quadratic equation. If you don’t remember quadratic equations from whatever secondary school maths lessons you had, they take this form:

a * t^2 + b * t + c = 0

So, substitution for ray:

X = X(ray(origin)) + X(ray(direction)) * t

Y = Y(ray(origin)) + Y(ray(direction)) * t

Z = Z(ray(origin)) + Z(ray(direction)) * t

Insert X, Y, Z into sphere equation:

(X - x0)^2 + (Y - y0)^2 + (Z - z0)^2 = r^2
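Written out with vectors, the substitution looks like this. The shorthand is mine: O is the ray origin, D the ray direction, C the sphere centre and r the radius.

$$
\begin{aligned}
\lVert O + tD - C \rVert^2 &= r^2 \\
(D \cdot D)\,t^2 + 2\,D \cdot (O - C)\,t + (O - C) \cdot (O - C) - r^2 &= 0
\end{aligned}
$$

So the quadratic coefficients are

$$
a = D \cdot D, \qquad b = 2\,D \cdot (O - C), \qquad c = (O - C) \cdot (O - C) - r^2 .
$$

With a unit direction vector, a is simply 1.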

Solve this quadratically, to find t.
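Here is a minimal Python sketch of that solve, assuming vectors are plain 3-tuples. The function name `intersect_sphere` is made up for illustration, and this isn’t necessarily how my own code is laid out.

```python
import math


def intersect_sphere(origin, direction, centre, radius):
    """Return the nearest t >= 0 where the ray hits the sphere, or None."""
    # Vector from the sphere centre to the ray origin
    oc = tuple(o - c for o, c in zip(origin, centre))

    # Coefficients of a*t^2 + b*t + c = 0
    a = sum(d * d for d in direction)  # equals 1 for a unit direction
    b = 2.0 * sum(d * k for d, k in zip(direction, oc))
    c = sum(k * k for k in oc) - radius * radius

    discriminant = b * b - 4.0 * a * c
    if discriminant < 0:
        return None  # no real roots: the ray misses the sphere

    root = math.sqrt(discriminant)
    t_near = (-b - root) / (2.0 * a)
    t_far = (-b + root) / (2.0 * a)

    # Prefer the closer hit; ignore hits behind the ray origin
    if t_near >= 0:
        return t_near
    if t_far >= 0:
        return t_far  # the origin is inside the sphere
    return None
```

A negative discriminant means a miss, zero means the ray just grazes the sphere, and a positive one gives two hits (entry and exit), of which the smaller non-negative t is the visible one.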

There. That was easy.

So, the first thing I did was define a scene composed of a single sphere, no lights, and shoot rays at it through an orthographic camera. All this accomplished was a sense of self-satisfaction as I watched some text in my console proving that some pixels came back as “hit” and some came back as “miss”. At this point I had no way to show the results on screen.
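To give a flavour of that hit/miss stage, here is a rough Python sketch (not my original code). It assumes an orthographic camera sitting on the z = 0 plane with every ray pointing straight down +z, one ray through the middle of each pixel. The names `hits_sphere` and `render_ascii` are invented for illustration.

```python
import math


def hits_sphere(origin, direction, centre, radius):
    """True if a ray with a unit direction hits the sphere in front of its origin."""
    oc = tuple(o - c for o, c in zip(origin, centre))
    b = 2.0 * sum(d * k for d, k in zip(direction, oc))
    c = sum(k * k for k in oc) - radius * radius
    discriminant = b * b - 4.0 * c  # a == 1 because direction is unit length
    if discriminant < 0:
        return False
    # If the far root is non-negative, some part of the sphere is in front
    return (-b + math.sqrt(discriminant)) / 2.0 >= 0


def render_ascii(width, height, centre, radius):
    """Return one string per row: '#' for a hit, '.' for a miss."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Orthographic camera: parallel rays, origin at the pixel centre
            origin = (x + 0.5, y + 0.5, 0.0)
            hit = hits_sphere(origin, (0.0, 0.0, 1.0), centre, radius)
            row += "#" if hit else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    for line in render_ascii(5, 5, (2.5, 2.5, 10.0), 1.2):
        print(line)
```

With an orthographic camera the sphere’s distance doesn’t change its size on screen, so the output is just a small blob of `#` characters in a field of dots.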

I could show you the results of this… but why?

The second thing I did was start hunting around for a way to display my output. Image libraries were out: I thought they might be too complex to learn to use. I simply wanted to define a window of the correct size, and use something like “setPixel”. After much google-fu, I eventually gave up and used the Windows API instead. This felt like a small betrayal (I’m mostly a Linux user for coding). It did, however, allow me to produce this:

Ah. A black screen.

After some more fiddling, this happened:

Hurrah! I had ray-traced my first scene.

Admittedly, it has no lighting, no shading, no support for more than a single sphere, no support for anything other than spheres… The list of things it doesn’t do goes on.

It DOES get better.